The Connectionist Approach [notes on cognitive science]

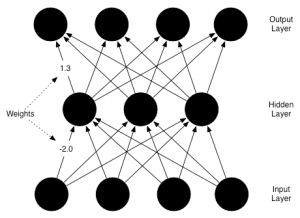

In the book Rethinking Innateness, Elman et al. introduce the advantages of connectionism as an explanatory model of human development and language acquisition. Connectionist models are usually built from a number of nodes interconnected by communication channels. These nodes receive biased activation and, depending on their internal threshold function, send out an activation of their own. Nodes can be connected and arranged in countless structures, from a simple layered network to highly complex multi-modular architectures. The connections between the nodes have weights, and it is in these weights that the knowledge of the network is stored.

These weights are always real-valued, and they alter the input value by multiplying it by their own value. So if a connection with a weight of -2.0 carries an input of 0.5, the resulting signal is -1.0, an inhibitory signal (if the result were positive, the signal would be excitatory). The net input of any given node is the sum, over all of its input nodes, of the products of each input’s activation and the weight of its connection. The node then has an internal response function that outputs a value according to the net input. This is usually a threshold function, whose output lies in the range 0 to 1. The most commonly used is the sigmoid, or logistic, function. For very large inputs it outputs a value close to 1; for very small (large negative) inputs it outputs a value close to 0. When the input is close to 0, the output lies in between, around 0.5, and is most sensitive to changes in the input. Changing the threshold function gives us either more gradual thresholds or more abrupt ones. The «magic» of these systems is that their nonlinear response allows for fine-grained distinctions between categories that are continuous in nature. In practice, however, we usually treat the outputs as zero or one (more on this later). Bias nodes are always on, and they allow us to set the default behavior of other nodes in the absence of input.
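As a minimal sketch of the net input and the logistic response described above, the following Python reproduces the worked example (an input of 0.5 through a weight of -2.0). The function names `net_input` and `logistic` are illustrative choices, not taken from the book.

```python
import math

def logistic(net):
    """Logistic (sigmoid) response: squashes a real net input into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-net))

def net_input(activations, weights, bias=0.0):
    """Sum of activation * weight over all incoming connections, plus a bias."""
    return sum(a * w for a, w in zip(activations, weights)) + bias

# The example from the text: an input of 0.5 through a weight of -2.0.
net = net_input([0.5], [-2.0])   # -1.0, an inhibitory signal
out = logistic(net)              # roughly 0.27

# A net input of exactly 0 sits in the middle of the output range.
middle = logistic(0.0)           # 0.5
```

Large positive net inputs push the output towards 1 and large negative ones towards 0, which is why the outputs are often read as simply "on" or "off".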


It is easy to see how to construct networks that implement logic functions such as AND. We connect two input nodes to an output node, with weights large enough that the net input activates the output when both inputs are on. A negative bias on the output makes sure that no single input will activate the output by itself.
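The AND network described above can be sketched as a single logistic output unit. The weight of 10 and bias of -15 are assumed values chosen for illustration; any weights where one input alone stays below the threshold and two inputs together exceed it would do.

```python
import math

def logistic(net):
    return 1.0 / (1.0 + math.exp(-net))

def and_unit(x1, x2, w=10.0, bias=-15.0):
    """Output node with two equally weighted inputs and a negative bias.

    w and bias are illustrative assumptions, not values from the text.
    """
    return logistic(w * x1 + w * x2 + bias)

# Net inputs are -15, -5, -5 and +5, so only (1, 1) ends up above 0.5.
truth_table = {(x1, x2): round(and_unit(x1, x2))
               for x1 in (0, 1) for x2 in (0, 1)}
```

With these values a single active input gives a net input of -5, which the logistic maps to a value near 0, so the bias does the job of keeping the output off by default.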

The architecture of a network is a central part of what the network knows. The common approach to architecture design is to follow theoretical claims about what we’re modelling. We create nodes and connections according to what we want our model to account for. McClelland and Rumelhart created a network for word perception with three layers, one each for feature-level, letter-level and word-level recognition. The behavior of these systems is sometimes quite unexpected, and provides predictions about human behavior that can be tested experimentally.

There are ways in which networks can configure themselves with the appropriate connections for a given task. In principle, this allows us to use them to develop theories. The earliest idea on how to make networks learn comes from Donald Hebb. He suggested that learning is driven by correlated activity, and a mathematical representation might be:

$$\Delta w_{ij} = \eta \, a_i a_j$$

where the first term, $\Delta w_{ij}$, represents the change in weight of the connection between nodes i and j, $\eta$ (eta) is the learning rate, and $a_i$ and $a_j$ are the activations of the two nodes. This view has support from biological mechanisms that implement something very similar. The system will then just be looking for patterns, without knowing anything in advance. However, Hebbian learning can only notice pair-wise correlations, and sometimes these just don’t cut it. To overcome this, we use the Perceptron Convergence Procedure (PCP).
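A minimal sketch of the Hebbian rule, in which the weight change is the learning rate times the product of the two activations. The function name and the learning rate of 0.1 are assumptions for illustration.

```python
def hebbian_update(w, a_i, a_j, eta=0.1):
    """Return the weight after one Hebbian step: w + eta * a_i * a_j."""
    return w + eta * a_i * a_j

# Two units that are repeatedly active together: the weight keeps growing.
w_correlated = 0.0
for _ in range(5):
    w_correlated = hebbian_update(w_correlated, a_i=1.0, a_j=1.0)

# If one of the two units is silent, the product is zero and nothing changes.
w_unchanged = hebbian_update(0.3, a_i=1.0, a_j=0.0)
```

Note that the rule only ever looks at one pair of nodes at a time, which is the pair-wise limitation mentioned above.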


We can base learning on the difference between the actual and the desired output of a system. We initialise the weights randomly, and with the help of a training set we learn the desired output values. We present one input pattern and compare the network’s output with the target output. This discrepancy is the error in the output, and we adjust the weights in proportion to it. We want to minimize the error until we obtain a network that generalises. This learning procedure only works for two-layer networks (inputs connected directly to outputs), and Minsky and Papert showed that these nets cannot solve problems that are not linearly separable, such as the logical exclusive-or (XOR).
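The error-driven procedure above can be sketched as follows, assuming a hard threshold output unit, a learning rate of 0.1 and a fixed random seed (all illustrative choices). Trained on AND the loop reaches an error-free pass; trained on XOR it never does, however long it runs.

```python
import random

def step(net):
    """Hard threshold: the unit is on only for a positive net input."""
    return 1 if net > 0 else 0

def train_perceptron(patterns, eta=0.1, max_epochs=1000, seed=0):
    """Adjust weights in proportion to (target - output) until a clean pass."""
    rng = random.Random(seed)
    weights = [rng.uniform(-0.5, 0.5) for _ in range(2)]  # random start
    bias = rng.uniform(-0.5, 0.5)
    for _ in range(max_epochs):
        errors = 0
        for inputs, target in patterns:
            net = sum(w * x for w, x in zip(weights, inputs)) + bias
            error = target - step(net)
            if error != 0:
                errors += 1
                weights = [w + eta * error * x
                           for w, x in zip(weights, inputs)]
                bias += eta * error
        if errors == 0:               # every pattern classified correctly
            return weights, bias, True
    return weights, bias, False       # gave up without a clean pass

AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

and_weights, and_bias, and_learned = train_perceptron(AND)
_, _, xor_learned = train_perceptron(XOR)
```

The XOR failure is not a matter of patience: no setting of two weights and a bias classifies all four patterns, so an error-free pass cannot occur.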

Neural nets are a kind of analogy engine in that they are able to generalize their behavior beyond the training cases, based on the similarity principle, where similar inputs yield similar outputs. However, similarity is only one source of information, and whilst it may serve well as a starting point, it can also lead us into pitfalls. For example, how do you classify pets vs wild animals? PCP is guaranteed to find a set of weights that gives the right response to novel inputs, as long as the classification is based on physical features. PCP is guaranteed to fail if the correct response cannot be defined in terms of similarity.

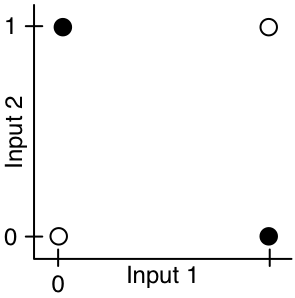

Let us go back to the XOR function. This cannot be learnt by a two-layer network because it isn’t linearly separable. If we plot the input space of zeros and ones in two dimensions, as in the figure on the left, the black dots are the input configurations where we want the network to output a one, and the empty dots are the configurations where we want a zero. So exclusive-or must yield one when exactly one of the inputs is on, and zero when they’re both off or both on.


Now the pairs that we need to group together, (0,0) with (1,1) and (1,0) with (0,1), are precisely the ones that are furthest apart in our input space. The problem lies in the fact that these groups aren’t linearly separable. This means that there is no straight line that can be drawn in this space such that the two groups fall on opposite sides of it. We call such a line the decision line, and when a problem cannot be solved by one such line, we call it nonlinearly separable.

In order to solve the nonlinearity problem, we must allow for internal representations. These arise with the introduction of a hidden layer to our architecture, so called because its units do not receive input directly from the world. They are somewhat equivalent to the psychological conception of internal representations. We can draw this analogy further by calling the input units sensors and the output units effectors. This will not be the case in many situations, but it’s a good enough approximation. The hidden layer allows the network to transcend physical similarity, by transforming input representations into a more abstract kind of representation. On the XOR problem, the hidden layer transforms the input into a linearly separable one, and allows the output unit to solve the problem correctly. When we say ‘solve’, however, we just mean that «there exists a set of weights such that the network can produce the right output for any input». These new structures also require a new learning paradigm, called backpropagation of error.
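To make the idea of a hidden layer re-representing the input concrete, here is a sketch of an XOR network with hand-set weights. The particular choice of an OR-like and an AND-like hidden unit, and the hard threshold units, are assumptions for illustration; other weight settings solve the problem just as well.

```python
def step(net):
    """Hard threshold: the unit is on only for a positive net input."""
    return 1 if net > 0 else 0

def xor_net(x1, x2):
    """Two hidden units feed one output unit; all weights are set by hand."""
    h_or = step(x1 + x2 - 0.5)        # on if at least one input is on
    h_and = step(x1 + x2 - 1.5)       # on only if both inputs are on
    out = step(h_or - h_and - 0.5)    # on if h_or is on and h_and is off
    return (h_or, h_and), out

hidden_codes = {}
outputs = {}
for x1 in (0, 1):
    for x2 in (0, 1):
        hidden_codes[(x1, x2)], outputs[(x1, x2)] = xor_net(x1, x2)
```

In the hidden layer, (0,1) and (1,0) both become (1,0), while (0,0) and (1,1) become (0,0) and (1,1). In that new space a single decision line separates the two groups, which is all the output unit needs.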

The need for backprop arises from the fact that it is not straightforward to see how the error of the hidden layer can be measured. In order to do so, we follow these steps. First, we calculate the error of the output layer. Then, for each node, we calculate what the output should have been, applying a scaling factor, thus arriving at the local error. We adjust the weights of each node to reduce the local error, assigning «blame» to the nodes of the previous level according to the strength of their weights. We then repeat this on the previous level, using each level’s blame as its error.
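The steps above can be sketched in plain Python for a network with one hidden layer, trained on XOR. This is a rough illustration under several assumptions: logistic units throughout, the scaling factor taken to be the slope of the logistic (output times one minus output), three hidden units, a learning rate of 0.5 and a fixed random seed. With other seeds or settings training may stall, so the sketch only records the error before and after rather than promising a solution.

```python
import math
import random

def logistic(net):
    return 1.0 / (1.0 + math.exp(-net))

def forward(x, w_hidden, w_out):
    """Each weight row ends with a bias weight fed by a constant input of 1."""
    hidden = [logistic(sum(w * v for w, v in zip(row, x + [1.0])))
              for row in w_hidden]
    output = logistic(sum(w * v for w, v in zip(w_out, hidden + [1.0])))
    return hidden, output

def total_error(patterns, w_hidden, w_out):
    """Sum of squared differences between targets and outputs."""
    return sum((t - forward(x, w_hidden, w_out)[1]) ** 2 for x, t in patterns)

def train(patterns, n_hidden=3, eta=0.5, epochs=5000, seed=1):
    rng = random.Random(seed)
    w_hidden = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(n_hidden)]
    w_out = [rng.uniform(-1, 1) for _ in range(n_hidden + 1)]
    before = total_error(patterns, w_hidden, w_out)
    for _ in range(epochs):
        for x, target in patterns:
            hidden, output = forward(x, w_hidden, w_out)
            # Local error at the output: raw error times the logistic slope.
            delta_out = (target - output) * output * (1 - output)
            # Blame for each hidden node, in proportion to the weight that
            # connects it to the output (computed before that weight changes).
            delta_hidden = [delta_out * w_out[j] * hidden[j] * (1 - hidden[j])
                            for j in range(n_hidden)]
            # Adjust output weights, then hidden weights, to reduce the error.
            for j, h in enumerate(hidden + [1.0]):
                w_out[j] += eta * delta_out * h
            for j in range(n_hidden):
                for i, v in enumerate(x + [1.0]):
                    w_hidden[j][i] += eta * delta_hidden[j] * v
    return w_hidden, w_out, before, total_error(patterns, w_hidden, w_out)

XOR = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0),
       ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]
w_hidden, w_out, error_before, error_after = train(XOR)
```

Comparing `error_before` with `error_after` shows how far training has reduced the discrepancy; calling `forward(x, w_hidden, w_out)` on each pattern shows the individual outputs.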

All in all, we’ve introduced the concept of neural networks as sets of nodes joined by unidirectional weighted connections. We’ve explored the notion of linear separability and the class of problems that can be solved by a simple two-layer neural network. We mentioned Hebbian and PCP learning and pointed out their shortcomings. We saw how XOR belongs to the class of nonlinearly separable problems, and we introduced the concept of hidden layers as a solution to this. Finally, backpropagation was introduced as a method to train networks with hidden layers, by propagating ‘blame’ onto the nodes with the strongest weight settings.
